
🚀 LazyLLMs: Revolutionizing AI Model Management in the Era of Generative AI

The world of artificial intelligence is experiencing explosive growth. If you're a developer or researcher working with AI models, you've probably faced the challenge of managing these increasingly complex systems. The numbers are striking: in the past year alone, the number of available open-source language models has grown by 233%, while their average size has grown by 85%. This rapid expansion creates challenges that every AI practitioner has to deal with.

The AI Model Management Crisis

Let's talk about what's really happening in the AI landscape. Remember when running a simple neural network was considered complex? Today's largest models need multiple GPUs and hundreds of gigabytes of RAM, and even mid-sized open models have substantial requirements:

- A modest 7B parameter model needs at least 8GB of GPU VRAM and 16GB of RAM

- Moving up to a 13B model? You'll need 16GB of VRAM and 32GB of RAM

- And if you're working with larger 33B models, we're talking about 24GB of VRAM and 64GB of RAM
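These figures are easier to sanity-check with a little arithmetic. A model's weights take roughly (parameter count) × (bytes per parameter), so the sketch below estimates that footprint at a few common precisions. It rests on a simplifying assumption: it counts weights only and ignores the KV cache, activations, and runtime overhead, all of which push real usage higher.

```python
# Rough estimate of the memory needed just to hold a model's weights.
# Assumption: weights only; KV cache, activations and framework overhead
# are NOT included, so actual usage will be higher.

BYTES_PER_PARAM = {
    "fp32": 4.0,
    "fp16": 2.0,  # same size for bf16
    "int8": 1.0,
    "int4": 0.5,  # 4-bit quantization, ignoring scale/zero-point overhead
}


def weight_memory_gib(num_params: float, precision: str) -> float:
    """Return the approximate weight footprint in GiB."""
    total_bytes = num_params * BYTES_PER_PARAM[precision]
    return total_bytes / 1024**3


if __name__ == "__main__":
    for billions in (7, 13, 33):
        row = ", ".join(
            f"{p}: {weight_memory_gib(billions * 1e9, p):5.1f} GiB"
            for p in ("fp16", "int8", "int4")
        )
        print(f"{billions}B -> {row}")
```

By this arithmetic a 7B model needs about 13 GiB for its weights in fp16 but only about 3.3 GiB at 4 bits, so the VRAM figures in the list only add up if the weights are quantized; fitting a 33B model into 24GB means roughly 4-bit weights.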

But it's not just about raw computing power. The real challenges emerge when you're trying to:

- Keep track of multiple model versions running simultaneously

- Monitor performance across different hardware configurations

- Manage GPU temperature and resource utilization

- Prevent memory leaks and system crashes

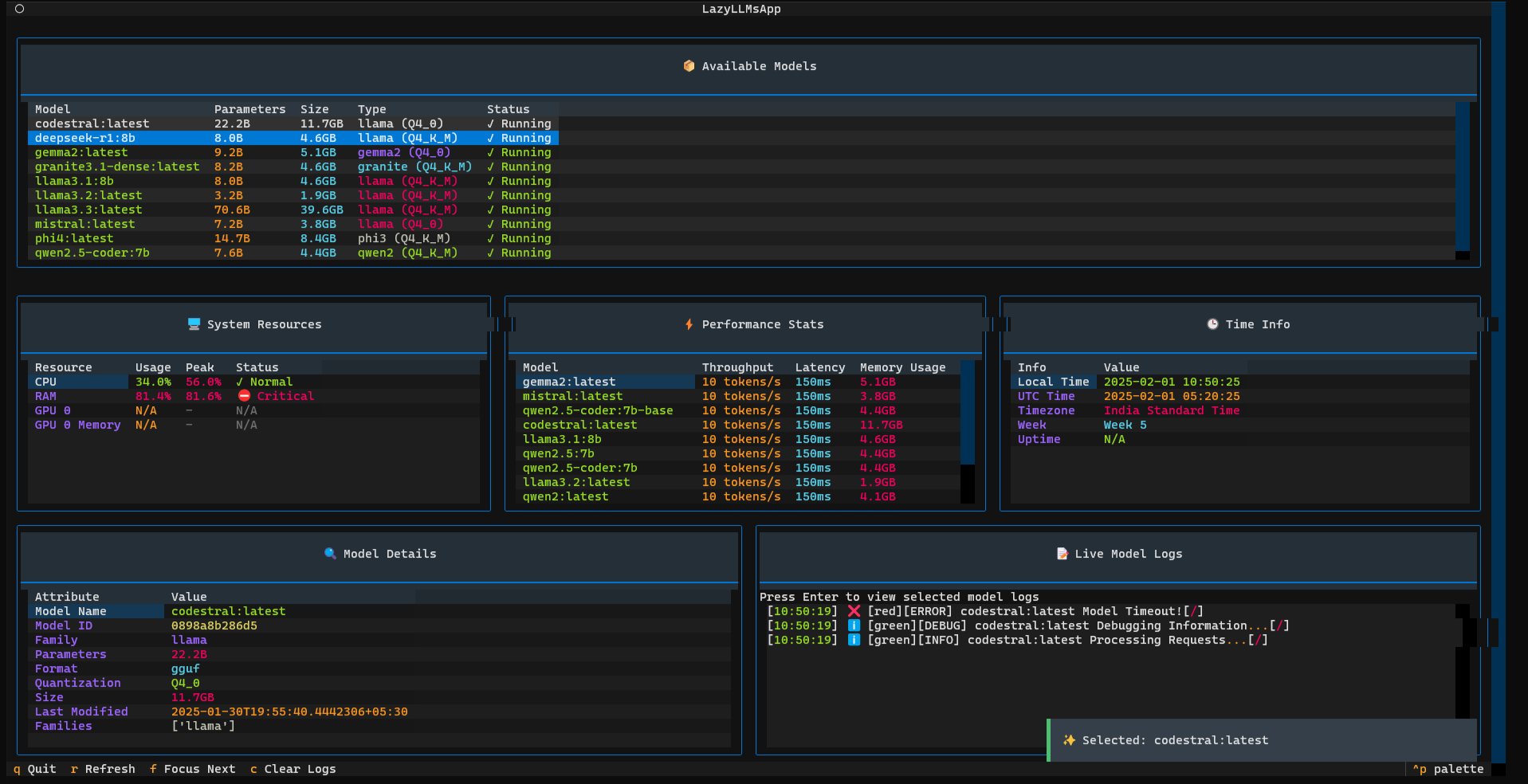

Enter LazyLLMs: A Fresh Approach to Model Management

This is where LazyLLMs comes in. Think of it as mission control for your AI operations: rather than juggling multiple terminal windows and monitoring tools, you get model management and system monitoring together in a single, intuitive terminal interface.

What makes LazyLLMs special? Let's break it down:

📊 Real-Time Intelligence

LazyLLMs provides comprehensive monitoring that helps you understand exactly what's happening with your models:

Performance Metrics at a Glance:

- 🌡️ GPU Temperature: Keep it between 30-75°C for optimal performance

- 📈 GPU Usage: Aim for 70-90% for efficient utilization

- 📊 VRAM Usage: Stay within 60-85% for stable operation

- 💡 CPU Usage: Maintain between 50-80% for balanced performance
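The target ranges above amount to a simple lookup table. The following minimal sketch classifies a set of readings against them; the metric names and the dictionary layout are hypothetical and are not taken from LazyLLMs' code.

```python
# Target ranges from the list above. Metric names are hypothetical.
TARGET_RANGES = {
    "gpu_temp_c": (30.0, 75.0),
    "gpu_util_pct": (70.0, 90.0),
    "vram_pct": (60.0, 85.0),
    "cpu_pct": (50.0, 80.0),
}


def classify(metric: str, value: float) -> str:
    """Return 'low', 'ok', or 'high' relative to the metric's target range."""
    low, high = TARGET_RANGES[metric]
    if value < low:
        return "low"
    if value > high:
        return "high"
    return "ok"


def summarize(readings: dict[str, float]) -> dict[str, str]:
    """Classify every reading that has a target range; skip the rest."""
    return {m: classify(m, v) for m, v in readings.items() if m in TARGET_RANGES}


if __name__ == "__main__":
    sample = {"gpu_temp_c": 68.0, "gpu_util_pct": 95.0, "vram_pct": 72.5, "cpu_pct": 40.0}
    print(summarize(sample))
    # {'gpu_temp_c': 'ok', 'gpu_util_pct': 'high', 'vram_pct': 'ok', 'cpu_pct': 'low'}
```

Treating the ranges as data rather than hard-coded `if` statements makes it easy to tune them per machine.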

🤖 Smart Model Management

The system supports a wide range of models:

- Language Models: From LLaMA to Mistral and Gemma

- Image Generation: Stable Diffusion and ControlNet

- Video Creation: AnimateDiff and related models

But it's not just about support - it's about smart management. LazyLLMs actively monitors your system and alerts you before problems arise. When your GPU temperature approaches 85°C or your VRAM usage exceeds 93%, you'll know it's time to take action.
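The alert thresholds work the same way, just with a harder edge than the target ranges. Here is an illustrative check that interprets "approaches 85°C" as reaching it. The `GpuSample` type and the function names are hypothetical, and how LazyLLMs itself raises alerts isn't described here. On NVIDIA hardware, readings like these can come from NVML (for example through the `pynvml` bindings' `nvmlDeviceGetTemperature` and `nvmlDeviceGetMemoryInfo`), though whether LazyLLMs uses NVML is an assumption.

```python
from typing import NamedTuple

# Alert thresholds quoted in the text; the surrounding code is a sketch.
TEMP_ALERT_C = 85.0
VRAM_ALERT_PCT = 93.0


class GpuSample(NamedTuple):
    """One hypothetical snapshot of GPU state."""
    temp_c: float
    vram_used_bytes: int
    vram_total_bytes: int


def check_alerts(sample: GpuSample) -> list[str]:
    """Return a human-readable message for every threshold that is reached."""
    alerts = []
    if sample.temp_c >= TEMP_ALERT_C:
        alerts.append(f"GPU temperature {sample.temp_c:.0f}°C >= {TEMP_ALERT_C:.0f}°C")
    vram_pct = 100.0 * sample.vram_used_bytes / sample.vram_total_bytes
    if vram_pct >= VRAM_ALERT_PCT:
        alerts.append(f"VRAM usage {vram_pct:.1f}% >= {VRAM_ALERT_PCT:.0f}%")
    return alerts


if __name__ == "__main__":
    gib = 1024**3
    sample = GpuSample(temp_c=86.0, vram_used_bytes=int(22.5 * gib), vram_total_bytes=24 * gib)
    for msg in check_alerts(sample):
        print("ALERT:", msg)
```

Keeping the check a pure function of a single sample makes it easy to test, and it works the same whether readings come from NVML, a log file, or a mock.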

Real-World Benefits

For Developers:

- Rapidly prototype and test different models without resource conflicts

- Identify performance bottlenecks before they impact your applications

- Monitor resource usage in real-time during development

For Researchers:

- Compare model performance across different configurations

- Track experiments with detailed resource utilization data

- Maintain stable environments for long-running tests

For Production Environments:

- Ensure stable model operation with proactive monitoring

- Optimize resource allocation across multiple models

- Respond quickly to potential issues before they affect service
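For the research use case of comparing configurations and tracking experiments with resource data, a common pattern is to reduce each run's utilization samples to a few comparable numbers. This is a sketch under that assumption. The run names and sample values are made-up illustrations, not measurements, and nothing here reflects LazyLLMs' own data format.

```python
from statistics import mean
from typing import NamedTuple


class RunSummary(NamedTuple):
    mean_pct: float
    peak_pct: float
    samples: int


def summarize_run(samples: list[float]) -> RunSummary:
    """Reduce a time series of utilization samples (in %) to summary stats."""
    if not samples:
        raise ValueError("a run needs at least one sample")
    return RunSummary(mean(samples), max(samples), len(samples))


def compare_runs(runs: dict[str, list[float]]) -> list[tuple[str, RunSummary]]:
    """Summarize each run and sort by peak usage, lowest first."""
    summaries = [(name, summarize_run(s)) for name, s in runs.items()]
    return sorted(summaries, key=lambda item: item[1].peak_pct)


if __name__ == "__main__":
    # Illustrative VRAM-usage samples only, not real measurements.
    runs = {
        "7b-int8-batch8": [70.0, 78.5, 81.0, 80.0],
        "7b-int4-batch8": [52.0, 61.5, 63.0, 62.5],
    }
    for name, s in compare_runs(runs):
        print(f"{name}: mean={s.mean_pct:.1f}% peak={s.peak_pct:.1f}% (n={s.samples})")
```

Sorting by peak rather than mean reflects the fact that a run fails on its worst moment, not its average one.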

The Future of Model Management

As AI continues to evolve, tools like LazyLLMs become increasingly essential. We're seeing models grow not just in size but in complexity. The future roadmap for LazyLLMs includes:

- Enhanced monitoring capabilities with predictive analytics

- Support for distributed systems and cloud environments

- Advanced visualization tools for better insight into model behavior

- Integration with popular cloud providers and containerized environments

Getting Started

Starting with LazyLLMs is straightforward. You don't need to be a system administrator or DevOps expert - the tool is designed to be intuitive while providing powerful capabilities. The interface responds to simple keyboard commands, and the monitoring system works right out of the box.

The Community Factor

One of the most exciting aspects of LazyLLMs is its growing community. Users are constantly sharing new configurations, best practices, and innovative ways to manage AI models. This collaborative approach ensures that the tool evolves alongside the rapidly changing AI landscape.

Conclusion

As AI models continue to grow in both size and complexity, having the right tools for management becomes crucial. LazyLLMs isn't just another monitoring tool - it's a comprehensive solution that makes AI model management accessible and efficient. Whether you're developing cutting-edge applications, conducting research, or managing production systems, LazyLLMs provides the visibility and control you need to work effectively with modern AI models.

The future of AI is exciting, but it's also increasingly complex. Tools like LazyLLMs help us navigate this complexity, allowing us to focus on innovation rather than infrastructure management. As we continue to push the boundaries of what's possible with AI, having reliable, efficient management tools becomes not just useful, but essential.
